Opticks + JUNO : Deploy GPU accelerated MC simulation ?

Opticks + JUNO : Deploy GPU accelerated MC production ?

Can Dirac(X) help ?

Open source, https://github.com/simoncblyth/opticks

Simon C Blyth, IHEP, CAS — The 11th DIRAC Users Workshop, IHEP — (19 September 2025)

Outline

- Optical Photon Simulation : Context and Problem

- (JUNO) Optical Photon Simulation Problem...

- Optical Photon Simulation ≈ Ray Traced Image Rendering

- NVIDIA RTX Generations 1=>4: RT performance : ~2x every ~2 years

- Opticks Optical simulation 4x faster 1st->3rd gen RTX

- NVIDIA OptiX : Ray Tracing Engine

- Photons from muon crossing JUNO Scintillator

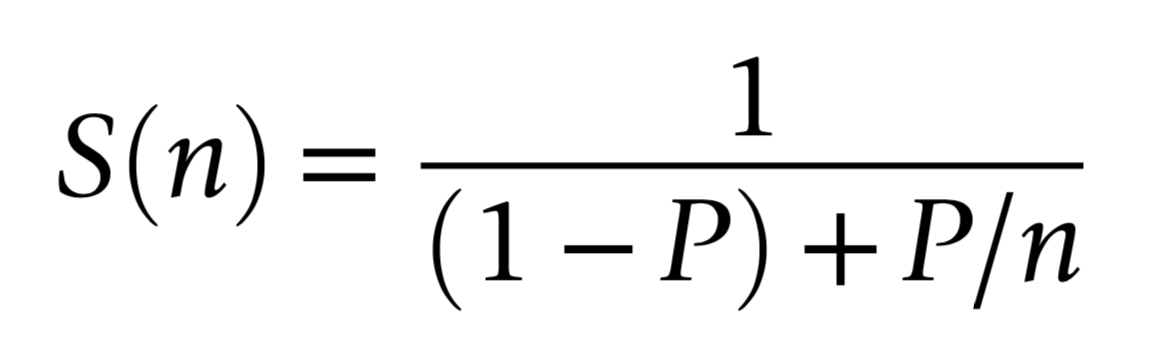

- Parallelized speedup

- Scaling Opticks Solution to Optical Photon Simulation Problem

- Geant4 + Opticks + NVIDIA OptiX : Hybrid Workflow

- Geant4 + Opticks + NVIDIA OptiX : Hybrid Workflow 2x2

- Geant4 + Opticks + NVIDIA OptiX : Hybrid Workflow 4x4

- OpticksClients + OpticksService : Split the Monolith

- Split Workflow : Share GPUs between OpticksClients

- Opticks MC production monolithic deployment with Dirac(X) ?

- Opticks MC production server/client deployment with Dirac(X) ?

- Summary + Links

(JUNO) Optical Photon Simulation Problem...

Huge CPU Memory+Time Expense

- JUNO Muon Simulation Bottleneck

- ~99% of CPU time, memory constraints

- Ray-geometry intersection dominates

- simulation is not alone in this problem...

- Optical photons : naturally parallel, simple :

- produced by Cherenkov+Scintillation

- yield only Photomultiplier hits

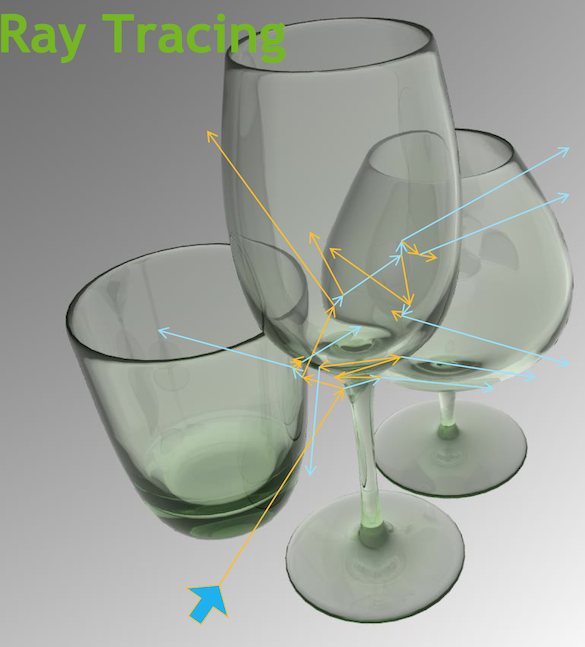

Optical Photon Simulation ≈ Ray Traced Image Rendering

Not a Photo, a Calculation

- simulation

- photon parameters at sensors (PMTs)

- rendering

- pixel values at image plane

Much in common : geometry, light sources, optical physics

- both limited by ray-geometry intersection, aka ray tracing

Many Applications of ray tracing :

- advertising, design, architecture, films, games,...

- -> huge efforts to improve hw+sw over 30 yrs

NVIDIA RTX Generations 1=>4

- RT Core : ray trace dedicated GPU hardware

- Each gen : doubled ray tracing speed:

- Blackwell (2025) ~2x ray trace over Ada

- Ada (2022) ~2x ray trace over Ampere

- Ampere (2020) ~2x ray trace over Turing (2018)

- NVIDIA Blackwell 4th Gen RTX : released 2025/01

- ray trace performance : ~2x every ~2 years

- Opticks optical speed directly scales with RT speed

![Event time vs photon count, 1st vs 3rd gen RTX](AB_Substamp_ALL_Etime_vs_Photon_rtx_gen1_gen3.png)

| Photons (M) | 1st gen RTX (s) | 3rd gen RTX (s) | Speedup (1st/3rd) |
|---|---|---|---|
| 1 | 0.47 | 0.14 | 3.28 |
| 10 | 0.44 | 0.13 | 3.48 |
| 20 | 4.39 | 1.10 | 3.99 |
| 30 | 8.87 | 2.26 | 3.93 |
| 40 | 13.29 | 3.38 | 3.93 |
| 50 | 18.13 | 4.49 | 4.03 |
| 60 | 22.64 | 5.70 | 3.97 |
| 70 | 27.31 | 6.78 | 4.03 |
| 80 | 32.24 | 7.99 | 4.03 |
| 90 | 37.92 | 9.33 | 4.06 |
| 100 | 41.93 | 10.42 | 4.03 |

Optical simulation 4x faster 1st->3rd gen RTX, (3rd gen, Ada : 100M photons simulated in 10 seconds) [TMM PMT model]
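The ~4x can be checked directly against the "~2x per generation" trend: two generations (1st to 3rd) predict 2 x 2 = 4x. A minimal Python sketch that recomputes the speedup and the 3rd gen throughput from the rounded times in the table; because the times are rounded, the recomputed ratios for the two smallest runs differ slightly from the table's speedup column.

```python
# Rows copied from the table: (photons in millions, 1st gen RTX seconds, 3rd gen RTX seconds)
ROWS = [
    (1, 0.47, 0.14), (10, 0.44, 0.13), (20, 4.39, 1.10), (30, 8.87, 2.26),
    (40, 13.29, 3.38), (50, 18.13, 4.49), (60, 22.64, 5.70), (70, 27.31, 6.78),
    (80, 32.24, 7.99), (90, 37.92, 9.33), (100, 41.93, 10.42),
]


def speedup(t_old: float, t_new: float) -> float:
    """Ratio of old to new wall time."""
    return t_old / t_new


def photons_per_second(photons_m: float, seconds: float) -> float:
    """Throughput from a photon count given in millions."""
    return photons_m * 1e6 / seconds


ratios = [speedup(g1, g3) for _, g1, g3 in ROWS]
expected = 2 ** 2  # two RTX generations at ~2x each
print(f"measured 1st->3rd gen: {min(ratios):.2f}x .. {max(ratios):.2f}x, trend predicts {expected}x")
print(f"3rd gen at 100M photons: {photons_per_second(100, 10.42):.3g} photons/s")
```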

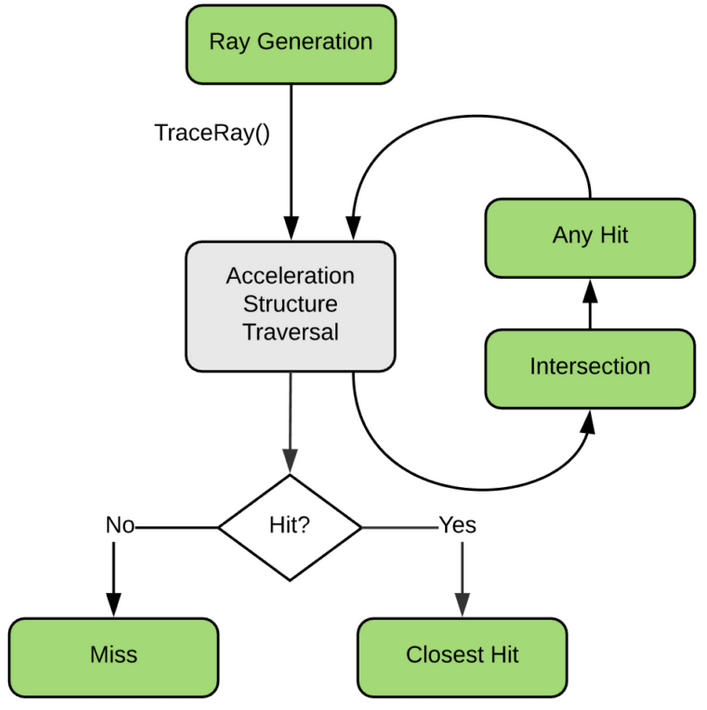

NVIDIA® OptiX™ Ray Tracing Engine -- Accessible GPU Ray Tracing

Flexible Ray Tracing Pipeline

Green: User Programs, Grey: Fixed function/HW

Analogous to OpenGL rasterization pipeline

OptiX makes GPU ray tracing accessible

- Programmable GPU-accelerated Ray-Tracing Pipeline

- Single-ray shader programming model using CUDA

- ray tracing acceleration using RT Cores (RTX GPUs)

- "...free to use within any application..."

OptiX features

- acceleration structure creation + traversal (eg BVH)

- instanced sharing of geometry + acceleration structures

- compiler optimized for GPU ray tracing

User provides (Green):

- ray generation

- geometry bounding boxes

- intersect functions

- instance transforms
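The roles of the user-provided bounding boxes and intersect functions can be sketched in plain Python. This is a conceptual illustration only, not the OptiX API: in OptiX these are CUDA programs, and the linear loop in the hypothetical `trace` function stands in for the BVH traversal that OptiX performs on RT Cores. A cheap ray/box "slab" test rejects most candidates, and the exact ray/sphere intersection runs only on those that survive.

```python
import math


def ray_aabb(origin, direction, bmin, bmax):
    """Slab test: True if the ray (t >= 0) hits the axis-aligned box [bmin, bmax]."""
    t_near, t_far = 0.0, math.inf
    for o, d, lo, hi in zip(origin, direction, bmin, bmax):
        if d == 0.0:
            if o < lo or o > hi:
                return False  # parallel to this slab and outside it
            continue
        t0, t1 = (lo - o) / d, (hi - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_near, t_far = max(t_near, t0), min(t_far, t1)
        if t_near > t_far:
            return False
    return True


def ray_sphere(origin, direction, center, radius):
    """Smallest t > 1e-6 with |origin + t*direction - center| == radius, else None.
    Assumes direction is normalized, so the quadratic is t^2 + 2bt + c = 0."""
    oc = [o - c for o, c in zip(origin, center)]
    b = sum(x * d for x, d in zip(oc, direction))
    c = sum(x * x for x in oc) - radius * radius
    disc = b * b - c
    if disc < 0.0:
        return None
    s = math.sqrt(disc)
    for t in (-b - s, -b + s):
        if t > 1e-6:
            return t
    return None


def trace(origin, direction, spheres):
    """Closest hit over (center, radius) spheres: box test culls, exact intersect confirms."""
    best = None
    for center, radius in spheres:
        bmin = [c - radius for c in center]
        bmax = [c + radius for c in center]
        if not ray_aabb(origin, direction, bmin, bmax):
            continue
        t = ray_sphere(origin, direction, center, radius)
        if t is not None and (best is None or t < best[0]):
            best = (t, center)
    return best


print(trace((0, 0, -10), (0, 0, 1), [((0, 0, 0), 1.0), ((0, 0, 5), 1.0), ((3, 0, 0), 1.0)]))
```

The sphere at `(3, 0, 0)` is rejected by the box test alone, the same culling that makes acceleration structures pay off on a detector geometry with many PMTs.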

Latest Release : NVIDIA® OptiX™ 9.0.0 (Feb 2025)

- NVIDIA R570 driver or newer

- each release requires a newer minimum driver

![photons from muon crossing JUNO Scintillator #12](GEOM_J25_4_0_opticks_Debug_cxr_min_muon_cxs_20250707_112242.png)

EVT=muon_cxs cxr_min.sh #12 : photons from muon crossing JUNO Scintillator

![photons from muon crossing JUNO Scintillator #13](GEOM_J25_4_0_opticks_Debug_cxr_min_muon_cxs_20250707_112243.png)

EVT=muon_cxs cxr_min.sh #13

![photons from muon crossing JUNO Scintillator #14](GEOM_J25_4_0_opticks_Debug_cxr_min_muon_cxs_20250707_112244.png)

EVT=muon_cxs cxr_min.sh #14

![Parallelized speedup : sensitivity to parallelized fraction p](amdahl_p_sensitive.png)
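The sensitivity to the parallelized fraction follows from Amdahl's law, S = 1 / ((1 - p) + p/s), where p is the fraction of run time that is accelerated and s is the speedup of that fraction. A minimal Python sketch; the s = 1000 photon-stage speedup is an illustrative assumption, not a measured Opticks figure.

```python
import math


def amdahl_speedup(p: float, s: float) -> float:
    """Overall speedup when a fraction p of the run time is made s times faster."""
    return 1.0 / ((1.0 - p) + p / s)


# p: fraction of CPU time spent on optical photons; s = 1000 is an assumed photon-stage speedup
for p in (0.9, 0.99, 0.999):
    print(f"p={p}: s=1000 -> {amdahl_speedup(p, 1000):.1f}x, s=inf -> {amdahl_speedup(p, math.inf):.1f}x")
```

With p = 0.99 the ceiling is 100x however fast the GPU is, and an s of 1000 already gives ~91x, which is why the remaining 1% of CPU work quickly becomes the limit.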

Geant4 + Opticks + NVIDIA OptiX : Hybrid Workflow

https://bitbucket.org/simoncblyth/opticks

Opticks API : split according to dependency -- Optical photons are GPU "resident", only hits need to be copied to CPU memory

Geant4 + Opticks + NVIDIA OptiX : Hybrid Workflow 2x2 ?


Geant4 + Opticks + NVIDIA OptiX : Hybrid Workflow 4x4 ?


"Monolithic" scaling : very inefficient use of scarce GPU resources

OpticksClients + OpticksService : Share GPUs

![OpticksClients + OpticksService](Client.png)

Split Workflow : Share GPUs between OpticksClients

- OpticksClient : Detector Simulation Framework (Geant4 etc.), GPU-less

- U4.h : collect gensteps

- NP_CURL.h : HTTP POST (libcurl)

- request : genstep array

- response : hits array

- OpticksService : CSGOptiX + NVIDIA OptiX + GPU

- FastAPI : ASGI Python web framework (alt: sanic, aiohttp) grandmetric.com/python-rest-frameworks-performance-comparison/

- nanobind : Python <=> C++ binding (alt: pybind11), uv : package manager (alt: pip)

- CSGFoundry.h : load persisted geometry

- CSGOptiXService.h : simulate

- Prototype clients + service under development

- scale up to MC production ? ~100/1000 clients ?

- use multi-GPU to serve more clients ?

- OR C++ web framework eg:

- no Python binding needed, but less standard
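The client/service split (request : genstep array, response : hits array) can be sketched with only the Python standard library. This is not the Opticks prototype, which uses FastAPI + nanobind on the service side and U4.h + NP_CURL.h (libcurl) on the client side. The wire format here (length-prefixed JSON shape header followed by little-endian float32 values) and the names `pack`, `unpack`, `fake_simulate` and `post_gensteps` are hypothetical, and `fake_simulate` returns zero-filled placeholder hits instead of running any GPU simulation.

```python
import json
import struct
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


def pack(shape, values):
    """Hypothetical wire format: uint32 header length, JSON shape header, float32 payload."""
    header = json.dumps({"shape": list(shape)}).encode()
    payload = struct.pack(f"<{len(values)}f", *values)
    return struct.pack("<I", len(header)) + header + payload


def unpack(blob):
    """Inverse of pack(): returns (shape as list, flat list of floats)."""
    (hlen,) = struct.unpack_from("<I", blob, 0)
    shape = json.loads(blob[4:4 + hlen])["shape"]
    count = (len(blob) - 4 - hlen) // 4
    values = list(struct.unpack_from(f"<{count}f", blob, 4 + hlen))
    return shape, values


def fake_simulate(genstep_shape, genstep_values):
    """Placeholder for the GPU simulation: one zero-filled (4,4) 'hit' per genstep."""
    n = genstep_shape[0]
    return (n, 4, 4), [0.0] * (n * 16)


class SimulateHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        # request body: genstep array; response body: hits array
        length = int(self.headers["Content-Length"])
        shape, values = unpack(self.rfile.read(length))
        body = pack(*fake_simulate(shape, values))
        self.send_response(200)
        self.send_header("Content-Type", "application/octet-stream")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):  # silence per-request logging in the demo
        pass


def post_gensteps(url, shape, values):
    """Client side: HTTP POST a genstep array, return the decoded hits array."""
    req = urllib.request.Request(
        url, data=pack(shape, values), method="POST",
        headers={"Content-Type": "application/octet-stream"},
    )
    with urllib.request.urlopen(req) as resp:
        return unpack(resp.read())


if __name__ == "__main__":
    server = HTTPServer(("127.0.0.1", 0), SimulateHandler)  # port 0: pick a free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    url = f"http://127.0.0.1:{server.server_port}/simulate"
    hit_shape, hits = post_gensteps(url, (3, 6, 4), [0.5] * (3 * 6 * 4))
    print(hit_shape, len(hits))  # [3, 4, 4] 48
    server.shutdown()
    server.server_close()
```

Because the client only needs to serialize arrays and make an HTTP POST, it carries no GPU, CUDA or OptiX dependency; all of that stays behind the service endpoint.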

Opticks MC production monolithic deployment with Dirac(X) ? Require:

- NVIDIA GPU (5~10x performance benefit with RTX GPUs)

- docker container support : https://hub.docker.com/r/simoncblyth/cuda/tags

- NVIDIA Container Toolkit

- Minimum NVIDIA Driver version requirement of NVIDIA OptiX

- NVIDIA OptiX : implementation is within Driver

NVIDIA GPU resources : expensive, high demand, difficult to fully utilize

Dirac(X) matching jobs to resources : Are Dirac "tags" expressive enough ?

- requirement for NVIDIA GPU

- mandatory NVIDIA Driver version >= ?

- GPU type order of preference (eg RTX generation "0",1,2,3,...) ?

- GPU VRAM requirement, min/max ?
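These questions amount to hard requirements (NVIDIA GPU, minimum driver, VRAM range) plus a soft preference order (RTX generation). A minimal Python sketch of that matching logic, assuming hypothetical node and requirement fields; it is not DIRAC's tag or JDL mechanism, only an illustration of what the tags would need to express. The R570 default follows the OptiX 9.0.0 driver requirement above; the VRAM values are placeholders.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class GpuNode:
    """Hypothetical worker-node description (field names are illustrative, not DIRAC tags)."""
    has_nvidia_gpu: bool
    driver_version: str   # e.g. "570.133.07", the dotted form nvidia-smi reports
    rtx_generation: int   # 0 = no RT cores, 1 = Turing, 2 = Ampere, 3 = Ada, 4 = Blackwell
    vram_gb: float


@dataclass
class GpuRequirement:
    min_driver_major: int = 570          # NVIDIA OptiX 9.0.0 needs an R570 driver or newer
    min_vram_gb: float = 8.0             # placeholder, not a measured Opticks requirement
    max_vram_gb: Optional[float] = None  # optional cap, keeps large GPUs for jobs that need them


def driver_major(version: str) -> int:
    """Major branch number of a dotted driver version string."""
    return int(version.split(".")[0])


def matches(node: GpuNode, req: GpuRequirement) -> bool:
    """Hard requirements: all must hold for the node to be eligible."""
    if not node.has_nvidia_gpu:
        return False
    if driver_major(node.driver_version) < req.min_driver_major:
        return False
    if node.vram_gb < req.min_vram_gb:
        return False
    return req.max_vram_gb is None or node.vram_gb <= req.max_vram_gb


def rank(nodes: List[GpuNode], req: GpuRequirement) -> List[GpuNode]:
    """Eligible nodes, preferred first: newest RTX generation, then most VRAM."""
    eligible = [n for n in nodes if matches(n, req)]
    return sorted(eligible, key=lambda n: (n.rtx_generation, n.vram_gb), reverse=True)
```

A flat list of string tags can express the hard requirements only if every driver branch and VRAM size is enumerated as its own tag; the preference order needs ranking support on the matcher side.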

GPU workloads are becoming ubiquitous; others will have similar needs

Opticks MC production Server/Client deployment with Dirac(X) ?

GPU-less OpticksClients : Geant4 + JUNOSW + libcurl : HTTP POST to OpticksService

Restrictions/Quotas ?

- HTTP POST request/response jobs<->server

- ~100 MB of network traffic per simulated event

- gensteps (~10k, 6, 4) float [~1 MB]

- hits (~1M, 4, 4) float [~64 MB]

- proxy to avoid network blocks ? (libcurl is very mature, with proxy support)

- complications : authentication, authorization, accounting

- Scale Opticks GPU optical photon simulation to large MC productions, with efficient GPU use

- benefit from open source packages/examples with similar requirements
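The per-event sizes above follow from the array shapes with 4-byte floats. A minimal Python sketch of that arithmetic: the raw payload is ~65 MB per event, consistent in order of magnitude with the ~100 MB estimate. The 100 clients each completing one event per minute is a hypothetical load, used only to show how the service bandwidth scales.

```python
from math import prod

FLOAT32_BYTES = 4


def array_bytes(shape, itemsize=FLOAT32_BYTES):
    """Raw payload size of a dense float32 array (no header or HTTP overhead)."""
    return prod(shape) * itemsize


genstep_bytes = array_bytes((10_000, 6, 4))   # request:  960,000 bytes, ~1 MB
hit_bytes = array_bytes((1_000_000, 4, 4))    # response: 64,000,000 bytes, 64 MB
per_event = genstep_bytes + hit_bytes

# Hypothetical load: 100 clients, each finishing one event per minute
clients, events_per_minute = 100, 1
bandwidth_mb_s = clients * events_per_minute * per_event / 60 / 1e6
print(f"{per_event / 1e6:.1f} MB per event, ~{bandwidth_mb_s:.0f} MB/s sustained at the service")
```

The response dominates: hits are ~65x larger than gensteps, so any hit selection or compression on the service side pays off far more than shrinking the request.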

Summary and Links

Extra Benefits of Adopting Opticks

- high performance novel visualization

- detailed photon instrumentation, validation

- comparisons find issues with both simulations:

- complex geometry, overlaps, bugs...

=> using Opticks improves CPU simulation too !!

Opticks : state-of-the-art GPU ray traced optical simulation integrated with Geant4, with automated geometry translation.

GPU-less OpticksClient + OpticksService in development, bringing Opticks everywhere + improving GPU utilization.

- NVIDIA Ray Trace Performance continues rapid progress (2x each gen., every ~2 yrs)

- any simulation limited by optical photons can benefit from Opticks

- more photon limited -> more overall speedup (99% of time in optical photons -> ~90x)

| Link | Description |
|---|---|
| https://bitbucket.org/simoncblyth/opticks | day-to-day code repository |
| https://simoncblyth.bitbucket.io | presentations and videos |
| https://groups.io/g/opticks | forum/mailing list archive |
| email: opticks+subscribe@groups.io | subscribe to mailing list |
| simon.c.blyth@gmail.com | any questions |